In late spring this year, the Barbican Centre in London will explore the promise and perils of artificial intelligence in a festival of films, workshops, concerts, talks and exhibitions. Even before the show opens, however, I have a bone to pick: what on earth induced the organisers to call their show AI: More than human?

More than human? What are we being sold here? What are we being asked to assume, about the technology and about ourselves?

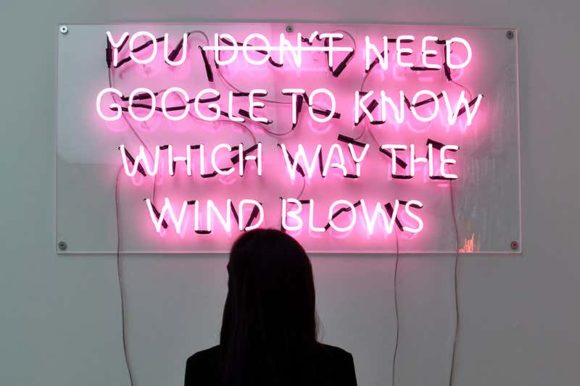

Language is at the heart of the problem. In his 2007 book, The Emotion Machine, computer scientist Marvin Minsky deplored (although even he couldn’t altogether avoid) the use of “suitcase words”: his phrase for words conveying specialist technical detail through simple metaphors. Think what we are doing when we say metal alloys “remember” their shape, or that a search engine offers “intelligent” answers to a query.

Without metaphors and the human tendency to personify, we would never be able to converse, let alone explore technical subjects, but the price we pay for communication is credulity when it comes to modelling how the world actually works. No wonder we are outraged when AI doesn’t behave intelligently. But it isn’t the program that is playing us false; it is the name we gave it.

Then there is the problem outlined by Benjamin Bratton, director of the Center for Design and Geopolitics at the University of California, San Diego, and author of the cyber bible The Stack. Speaking at Dubai’s Belief in AI symposium last year, he said we use suitcase words from religion when we talk about AI, because we simply don’t know what AI is yet.

For how long, he asked, should we go along with the prevailing hype and indulge the idea that artificial intelligence resembles (never mind surpasses) human intelligence? Might this warp or spoil a promising technology?

The Dubai symposium, organised by Kenric McDowell and Ben Vickers, interrogated these questions well. McDowell leads the Artists and Machine Intelligence programme at Google Research, while Vickers has overseen experiments in neural-network art at the Serpentine Gallery in London. Conversations, talks and screenings explored what they called a “monumental shift in how societies construct the everyday”, as we increasingly hand over our decision-making to non-humans.

Some of this territory is familiar. Ramon Amaro, a design engineer at Goldsmiths, University of London, drew the obvious moral from the story of researcher Joy Buolamwini, whose facial-recognition art project The Aspire Mirror refused to recognise her because of her black skin.

The point is not simple racism. The truth is even more disturbing: machines are nowhere near clever enough to handle the huge spread of the normal distribution along which virtually all human characteristics and behaviours lie. The tendency to exclude is embedded in the mathematics of these machines, and no patching can fix it.

Yuk Hui, a philosopher who studied computer engineering and philosophy at the University of Hong Kong, broadened the lesson. Rational, disinterested thinking machines, in his view, are simply impossible to build. The problem is not technical but formal, because thinking always has a purpose: without a goal, it is too expensive a process to arise spontaneously.

The more machines emulate real brains, argued Hui, the more they will evolve – from autonomic response to brute urge to emotion. The implication is clear. When we give these recursive neural networks access to the internet, we are setting wild animals loose.

Although the speakers were well-informed, Belief in AI was never intended to be a technical conference, and so ran the risk of all such speculative endeavours – drowning in hyperbole. Artists using neural networks in their practice are painfully aware of this. One artist absent from the conference, but cited by several speakers, was Turkish-born Memo Akten, based at Somerset House in London.

His neural networks make predictions on live webcam input, using previously seen images to make sense of new ones. In one experiment, a scene including a dishcloth is converted into a Turneresque animation by a recursive neural network trained on seascapes. The temptation to say this network is “interpreting” the view, and “creating” art from it, is well-nigh irresistible. It drives Akten crazy. Earlier this year, in a public forum, he threatened to strangle a kitten whenever anyone in the audience personified AI (by talking about “the AI”, for instance).

It was left to novelist Rana Dasgupta to really put the frighteners on us as he coolly unpicked the state of automated late capitalism. Today, capital and rental income are the true indices of political participation, just as they were before the industrial revolution. Wage rises? Improved working conditions? Aspiration? All so last year. Automation has made their obliteration possible by reducing the costs of manufacture to virtually nothing.

Dasgupta’s vision of lives spent in subjection to a World Machine – fertile, terrifying, inhuman, unethical, and not in the least interested in us – was a suitcase of sorts, too, containing a lot of hype and no small amount of theology. It was also impossible to dismiss.

Cultural institutions dabbling in the AI pond should note the obvious moral. When we design something we decide to call an artificial intelligence, we commit ourselves to a linguistic path we shouldn’t pursue. To put it more simply: we must be careful what we wish for.