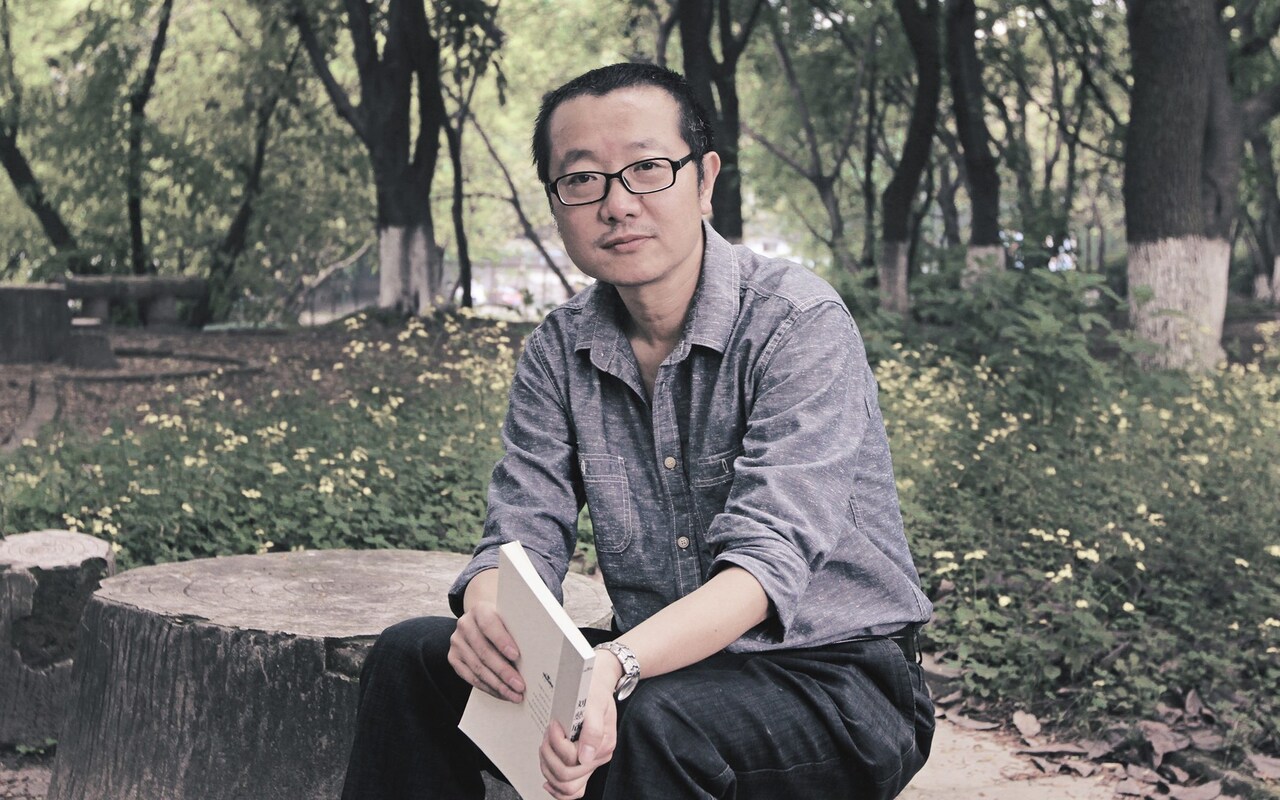

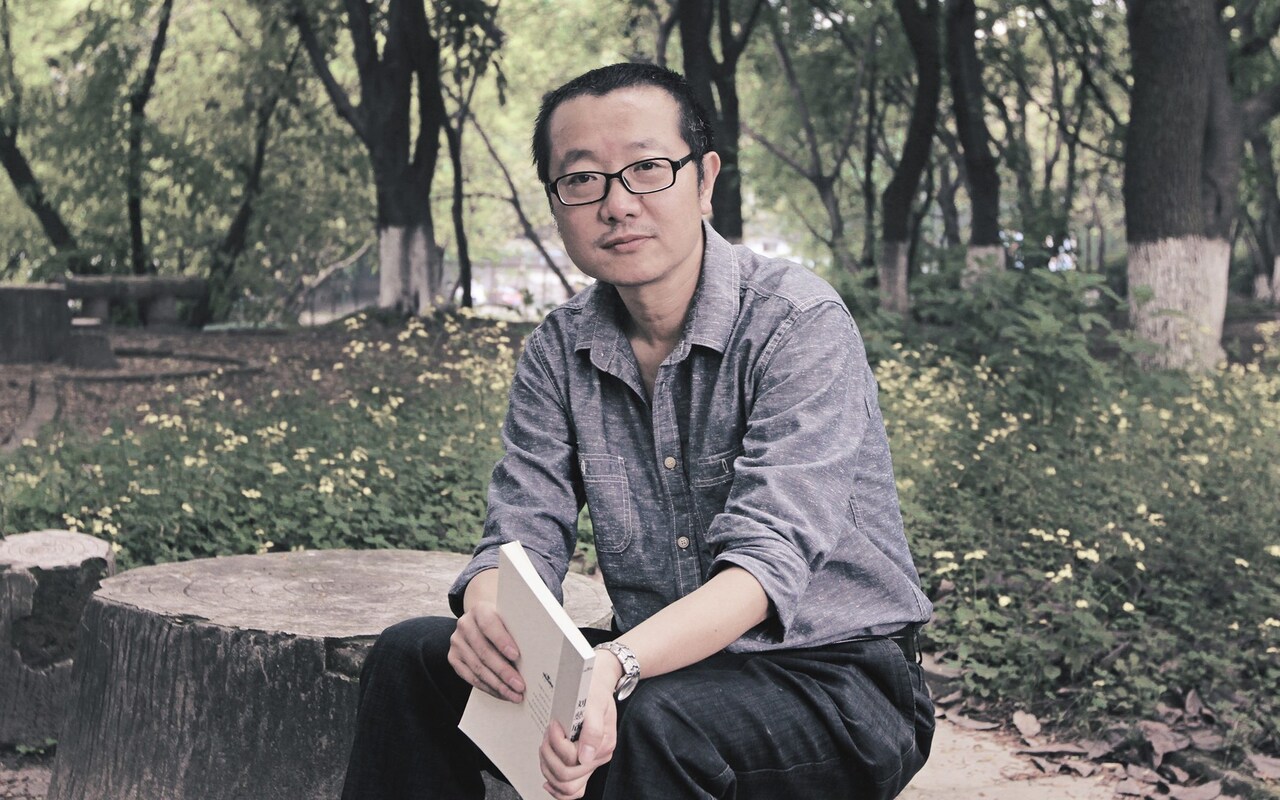

Interviewing Cixin Liu for The Telegraph, 29 February 2024

Chinese writer Cixin Liu steeps his science fiction in disaster and misfortune, even as he insists he’s just playing around with ideas. His seven novels and a clutch of short stories and articles (soon to be collected in a new English translation, A View from the Stars) have made him world-famous. His best-known novel, The Three-Body Problem, won the Hugo, the nearest thing science fiction has to a heavy-hitting prize, in 2015. Closer to home, he’s won the Galaxy Award, China’s most prestigious literary science-fiction award, nine times. A 2019 film adaptation of his novella “The Wandering Earth” (in which we have to propel the planet clear of a swelling sun) earned nearly half a billion dollars in the first 10 days of its release. Meanwhile, The Three-Body Problem and its two sequels have sold more than eight million copies worldwide. Now they’re being adapted for the screen, and not for the first time: the first two adaptations were domestic Chinese efforts. A 2015 film was suspended during production (“No-one here had experience of productions of this scale,” says Liu, speaking over a video link from a room piled with books). The more recent TV effort is, from what I’ve seen of it, jolly good, though it only scratches the surface of the first book.

Now streaming service Netflix is bringing Liu’s whole trilogy to a global audience. Clean behind your sofa, because you’re going to need somewhere to hide from an alien visitation quite unlike any other.

For some of us, that invasion will come almost as a relief. So many English-speaking sf writers these days spend their time bending over backwards, offering “design solutions” to real-life planetary crises, and especially to climate change. They would have you believe that science fiction is good for you.

Liu, a bona fide computer engineer in his mid-fifties, is immune to such virtue signalling. “From a technical perspective, sf cannot really help the world,” he says. “Science fiction is ephemeral, because we build it on ideas in science and technology that are always changing and improving. I suppose we might inspire people a little.”

Western media outlets tend to cast Liu — a domestic celebrity with a global reputation and a fantastic US sales record — as a put-upon and presumably reluctant spokesperson for the Chinese Communist Party. The Liu I’m speaking to is garrulous, well-read, iconoclastic, and eager. (It’s his idea that we end up speaking for nearly an hour more than scheduled.) He’s hard-headed about human frailty and global Realpolitik, and he likes shocking his audience. He believes in progress, in technology, and, yes — get ready to clutch your pearls — he believes in his country. But we’ll get to that.

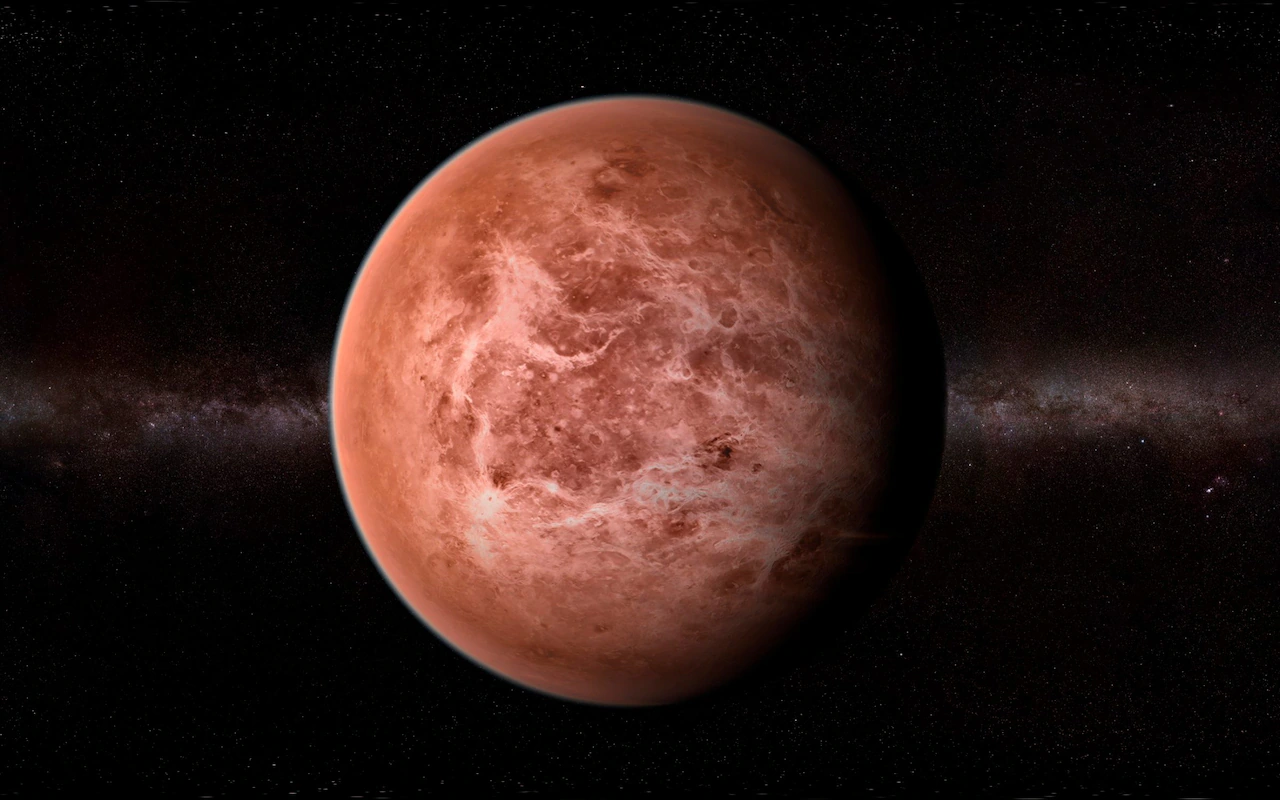

We promised you disaster and misfortune. In The Three-Body Problem, the great Trisolaran Fleet has already set sail from its impossibly inhospitable homeworld, which orbits three suns. (What does not kill you makes you stronger, and their madly unpredictable environment has made the Trisolarans very strong indeed.) They’ll arrive in 450 years or so: more than enough time, you would think, for us to develop technology advanced enough to repel them. That is why the Trisolarans have sent two super-intelligent, proton-sized supercomputers to Earth at near-light speed, to mess with our minds, muddle our reality, and drive us into self-hatred and despair. Only science can save us. Maybe.

The forthcoming Netflix adaptation is produced by Game of Thrones’s David Benioff and D.B. Weiss and True Blood’s Alexander Woo. In covering all three books, it will need to wrap itself around a conflict that lasts millennia, and realistically its characters won’t be able to live long enough to witness more than fragments of the action. The parallel with the downright deathy Game of Thrones is clear: “I watched Game of Thrones before agreeing to the adaptation,” says Liu. “I found it overwhelming — quite shocking, but in a positive way.”

By the end of its run, Game of Thrones had become as solemn as an owl, and that approach won’t work for The Three-Body Problem, which leavens its cosmic pessimism (a universe full of silent, hostile aliens, stalking their prey among the stars) with long, delightful episodes of sheer goofiness — including one about a miles-wide Trisolaran computer chip made up entirely of people in uniform, marching about, galloping up and down, frantically waving flags…

A computer chip the size of a town! A nine-dimensional supercomputer the size of a proton! How on Earth does Liu build engaging stories from such baubles? Well, says Liu, you need a particular kind of audience — one for whom anything seems possible.

“China’s developing really fast, and people are confronting opportunities and challenges that make them think about the future in a wildly imaginative and speculative way,” he explains. “When China’s pace of development slows, its science fiction will change. It’ll become more about people and their everyday experiences. It’ll become more about economics and politics, less about physics and astronomy. The same has already happened to western sf.”

Of course, it’s a moot point whether anything at all will be written by then. Liu reckons that within a generation or two, artificial intelligence will take care of all our entertainment needs. “The writers in Hollywood didn’t strike over nothing,” he observes. “All machine-made entertainment requires, alongside a few likely breakthroughs, is ever more data about what people write and consume and enjoy.” Liu, who claims to have retired and to have no skin in this game any more, points to a recent Chinese effort, the AI-authored novel Land of Memories, which won second prize in a regional sf competition. “I think I’m the final generation of writers who will create novels based purely on their own thinking, without the aid of artificial intelligence,” he says. “The next generation will use AI as an always-on assistant. The generation after that won’t write.”

Perhaps he’s being mischievous (a strong and ever-present possibility). He may just be spinning some grand-sounding principle out of his own charmingly modest self-estimate. “I’m glad people like my work,” he says, “but I doubt I’ll be remembered even ten years from now. I’ve not written very much. And the imagination I’ve been able to bring to bear on my work is not exceptional.” His list of influences is long. His father bought him Wells and Verne in translation. Much else, including Kurt Vonnegut and Ray Bradbury, he had to translate word for word with a dictionary. “As an sf writer, I’m optimistic about our future,” Liu says. “The resources in our solar system alone can feed about 100,000 planet Earths. Our future is potentially limitless, even within our current neighbourhood.”

Wrapping our heads around the scales involved is tricky, though. “The efforts countries are taking now to get off-world are definitely meaningful,” he says, “but they’re not very realistic. We have big ideas, and Elon Musk has some exciting propulsion technology, but the economic base for space exploration just isn’t there. And this matters, because visiting neighbouring planets is a huge endeavour, one that makes the Apollo missions of the Sixties and Seventies look like a fast train ride.”

Underneath such measured optimism lurks a pessimistic view of our future on Earth. “More and more people are getting to the point where they’re happy with what they’ve got,” he complains. “They’re comfortable. They don’t want to make any more progress. They don’t want to push any harder. And yet the Earth is pretty messed up. If we don’t get into space, soon we’re not going to have anywhere to live at all.”

The trouble with writing science fiction is that everyone expects you to have an instant answer to everything. Back in June 2019, a New Yorker interviewer asked him what he thought of the Uighurs (he replied: a bunch of terrorists) and their treatment at the hands of the Chinese government (he replied: firm but fair). The following year some Republican senators in the US tried to shame Netflix into cancelling The Three-Body Problem. Netflix pointed out (with some force) that the show was Benioff and Weiss and Woo’s baby, not Liu’s. A more precious writer might have taken offence, but Liu thinks Netflix’s response was spot-on. “Neither Netflix nor I wanted to think about these issues together,” he says.

And it doesn’t do much good to spin his expression of mainstream public opinion in China (however much we deplore it) into some specious “parroting [of] dangerous CCP propaganda”. The Chinese state is monolithic, but it’s not that monolithic: witness the popular success of Liu’s own The Three-Body Problem, in which a girl sees her father beaten to death by a fourteen-year-old Red Guard during the Cultural Revolution, grows embittered during what she expects will be a lifetime’s state imprisonment, and goes on to betray the entire human race, telling the alien invaders, “We cannot save ourselves.”

Meanwhile, Liu has learned to be ameliorative. In a nod to Steven Pinker’s 2011 book The Better Angels of Our Nature, he points out that while wars continue around the globe, the bloodshed generated by warfare has been declining for decades. He imagines a world of ever-growing moderation, even the eventual melting away of the nation state.

When needled, he goes so far as to be realistic: “No system suits all. Governments are shaped by history, culture, the economy — it’s pointless to argue that one system is better than another. The best you can hope for is that they each moderate whatever excesses they throw up. People are not and never have been free to do anything they want, and people’s idea of what constitutes freedom changes, depending on what emergency they’re having to handle.”

And our biggest emergency right now? Liu picks the rise of artificial intelligence, not because our prospects are so obviously dismal (though killer robots are a worry), but because mismanaging AI would be humanity’s biggest own goal ever: destroyed by the very technology that could have taken us to the stars!

Ungoverned AI could quite easily drive a generation to rebel against technology itself. “AI has been taking over lots of people’s jobs, and these aren’t simple jobs, these are what highly educated people expected to spend lifetimes getting good at. The employment rate in China isn’t so good right now. Couple that with badly managed roll-outs of AI, and you’ve got frustration and chaos and people wanting to destroy the machines, just as they did at the beginning of the industrial revolution.”

Once again we find ourselves in a dark place. But then, what did you expect from a science fiction writer? They sparkle best in the dark. And for those who don’t yet know his work, there’s good news: Liu is pleased, so far, with Netflix’s version of his signature tale of interstellar terror, even if its westernisation does baffle him at times.

“All these characters of mine that were scientists and engineers,” he sighs. “They’re all politicians now. What’s that about?”