Reading Carlo Rovelli’s Anaximander and the Nature of Science for New Scientist, 8 March 2023

Astronomy was conducted at Chinese government institutions for more than 20 centuries, before Jesuit missionaries turned up and, somewhat bemused, pointed out that the Earth is round.

Why, after so much close observation and meticulous record-keeping, did seventeenth-century Chinese astronomers still think the Earth was flat?

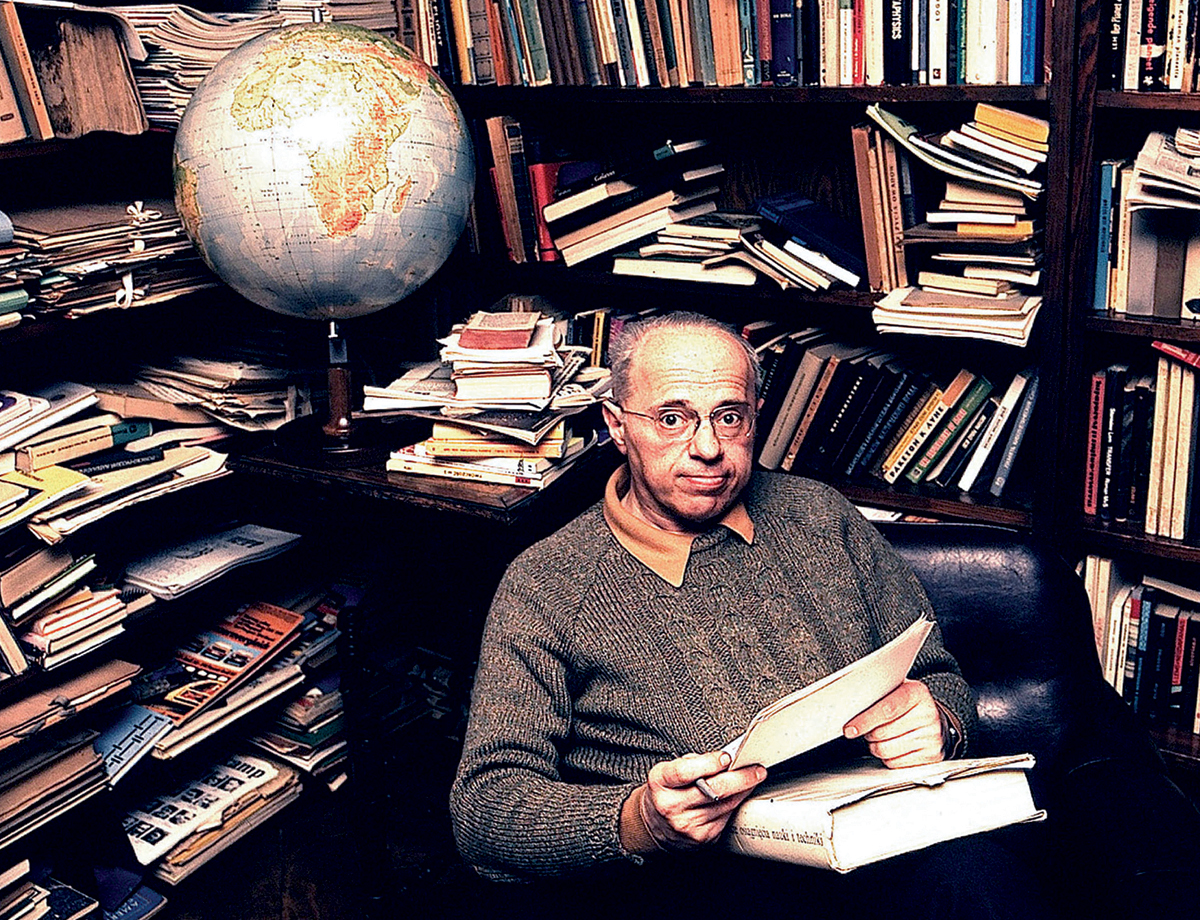

The theoretical physicist Carlo Rovelli, writing in 2007 (this is an able and lively translation of his first book), can be certain of one thing: “that the observation of celestial phenomena over many centuries, with the full support of political authorities, is not sufficient to lead to clear advances in understanding the structure of the world.”

So what gave Europe its preternaturally clear-eyed idea of how physical reality works? Rovelli ties his several answers, covering history, philosophy, politics and religion, to the life, thought and work of Anaximander, who was born 26 centuries ago in the cosmopolitan city (population 100,000) of Miletus, on the coast of present-day Turkey.

We learn about Anaximander, born in 610 BCE, mostly through Aristotle. The only treatise of his we know about is now lost, aside from a tantalising fragment that reveals Anaximander’s notion that there exist natural laws that organise phenomena through time. He also figured out where wind and rain came from, and deduced, from observation, that all animals originally came from the sea, and must have arisen from fish or fish-like creatures.

Rovelli is not interested in startling examples of apparent prescience. Even a stopped watch is correct twice a day. He is positively enchanted, though, by the quality of Anaximander’s thought.

Consider the philosopher’s most famous observation — that the Earth is a finite body of rock floating freely in space.

Anaximander grasps that there is a void beneath the Earth through which heavenly bodies (the sun, to take an obvious example) must travel when they roll out of sight. This is really saying not much more than that, when a man walks behind a house, he’ll eventually reappear on the other side.

What makes this “obvious” observation so radical is that, applied to heavenly bodies, it contradicts our everyday experience.

In everyday life, objects fall in one direction. The idea that space does not have a privileged direction in which objects fall runs against common sense.

So Anaximander arrives at a concept of gravity: he calls it “domination”. Earth hangs in space without falling because it does not have any particular direction in which to fall, and that is because there’s nothing around big enough to dominate it. You and I are much smaller than the Earth, and so we fall towards it. “Up” and “down” are no longer absolutes. They are relative.

The second half of Rovelli’s book (less thrilling, and more trenchant, perhaps to compensate for the fact that it covers more familiar territory) explains how science, evolving out of Anaximander’s constructive yet critical attitude towards his teacher Thales, developed a really quite unnatural way of thinking.

Thales, says Anaximander, was a wise man who was wrong about everything being made of water. The idea that we can be wise and wrong at the same time, Rovelli says, can come only from a sophisticated theory of knowledge “according to which truth is accessible but only gradually, by means of successive refinements.”

All Rovelli’s wit and intellectual dexterity are in evidence in this thrilling early work, and almost all his charm, as he explains how Copernicus perfected Ptolemy, by applying Ptolemy’s mathematics to a better-framed question, and how Einstein perfected Newton by pushing Newton’s mathematics past certain a priori assumptions.

Nothing is thrown away in such scientific “revolutions”. Everything is repurposed.