Reading Sentient by Jackie Higgins for the Times, 19 June 2021

In May 1971 a young man from Portsmouth, Ian Waterman, lost all sense of his body. He wasn’t just numb. A person has a sense of the position of their body in space. In Waterman, that sense fell away, mysteriously and permanently.

Waterman, now in his seventies, has learned to operate his body rather as the rest of us operate a car. He has executive control over his movements, but no very intimate sense of what his flesh is up to.

What must this be like?

In a late chapter of her epic account of how the senses make sense, and exhibiting the kind of left-field thinking that makes for great TV documentaries, writer-director-producer Jackie Higgins goes looking for answers among the octopuses.

The octopus’s brain, you see, has no fine control over its arms. They pretty much do their own thing. They do, though, respond to the occasional high-level executive order. “Tally-ho!” cries the brain, and the arms gallop off, the brain in no more (or less) control of its transport system than a girl on a pony at a gymkhana.

Is being Ian Waterman anything like being an octopus? Attempts to imagine our way into other animals’ experiences — or other people’s experience, for that matter — have for a long time fallen under the shadow of an essay written in 1974 by American philosopher Thomas Nagel.

“What Is It Like to Be a Bat?” wasn’t about bats so much as to do with consciousness (continuity of). I can, with enough tequila inside me, imagine what it would be like for me to be a bat. But that’s not the same as knowing what it’s like for a bat to be a bat.

Nagel’s lesson in gloomy solipsism is all very well in philosophy. Applied to natural history, though — where even a vague notion of what a bat feels like might help a naturalist towards a moment of insight — it merely sticks the perfect in the way of the good. Every sparky natural history writer cocks a snook at poor Nagel whenever the opportunity arises.

Advances in media technology over the last twenty years (including, for birds, tiny monitor-stuffed backpacks) have deluged us in fine-grained information about how animals behave. We now have a much better idea of what (and how) they feel.

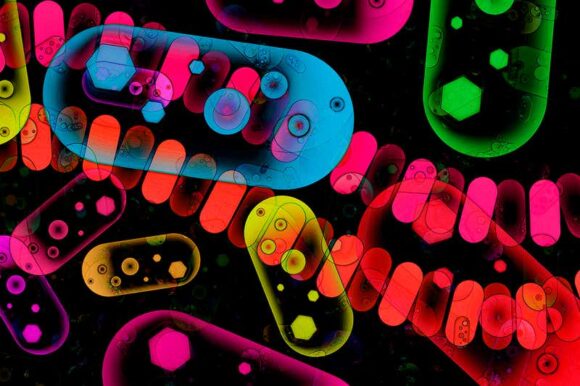

Now, you can take this sort of thing only so far. The mantis shrimp (not a shrimp; a scampi) has up to sixteen kinds of narrow-band photoreceptor, each tuned to a different wavelength of light! Humans only have three. Does this mean that the mantis shrimp enjoys better colour vision than we do?

Nope. The mantis shrimp is blind to colour, in the human sense of the word, perceiving only wavelengths. The human brain meanwhile, by processing the relative intensities of those three wavelengths of colour vision, distinguishes between millions of colours. (Some women have four colour receptors, which is why you should never argue with a woman about which curtains match the sofa.)

What about the star-nosed mole, whose octopus-like head is a mass of feelers? (Relax: it’s otherwise quite cute, and only about 2cm long.) Its weird nose is sensitive: it gathers the same amount of information about what it touches as a regular rodent’s eye gathers about what it sees. This makes the star-nosed mole the fastest hunter we know of, identifying and capturing prey (worms) in literally less than an eyeblink.

What can such a creature tell us about our own senses? A fair bit, actually. That nose is so sensitive, the mole’s visual cortex is used to process the information. It literally sees through its nose.

But that turns out not to be so very strange: Braille readers, for example, really do read through their fingertips, harnessing their visual cortex to the task. One veteran researcher, Paul Bach-y-Rita, has been building prosthetic eyes since the 1970s, using glorified pin-art machines to (literally) impress the visual world upon his volunteers’ backs, chests, even their tongues.

From touch to sound: in the course of learning about bats, I learned here that blind people have been using echolocation for years, especially when it rains (more auditory information, you see); researchers are only now getting a measure of their abilities.

How many senses are there that we might not have noticed? Over thirty, it seems, all served by dedicated receptors, and many of them elude our consciousness entirely. (We may even share the magnetic sense enjoyed by migrating birds! But don’t get too excited. Most mammals seem to have this sense. Your pet dog almost always pees with its head facing magnetic north.)

This embarrassment of riches leaves Higgins having to decide what to include and what to leave out. There’s a cracking chapter here on how animals sense time, and some exciting details about a sense of touch common to social mammals: one that responds specifically to cuddling.

On the other hand there’s very little about our extremely rare ability to smell what we eat while we eat it. This retronasal olfaction gives us a palate unrivalled in the animal kingdom, capable of discriminating between nearly two trillion savours: an ability which has all kinds of implications for memory and behaviour.

Is this a problem? Not at all. For all that it’s stuffed with entertaining oddities, Sentient is not a book about oddities, and Higgins’s argument, though colourful, is rigorous and focused. Over 400 exhilarating pages, she leads us to adopt an entirely unfamiliar way of thinking about the senses.

Because their mechanics are fascinating and to some degree reproducible (the human eye is, mechanically speaking, very much like a camera) we grow up thinking of the senses as mechanical outputs.

Looking at our senses this way, however, is rather like studying fungi but only looking at the pretty fruiting bodies. The real magic of fungi is their networks. And the real magic of our senses is the more than 100 billion nerve cells in each human nervous system — greater, Higgins says, than the number of stars in the Milky Way.

And that vast complexity — adapting to reflect and organise the world, not just over evolutionary time but also over the course of an individual life — gives rise to all kinds of surprises. In some humans, the ability to see with sound. In vampire bats (who can sense the location of individual veins to sink their little fangs into), the ability to detect heat using receptors that in most other mammals are used to detect acute pain.

In De Anima, the ancient philosopher Aristotle really let the side down in listing just five senses. No one expects him to have spotted exotica like cuddlesomeness and where-to-face-when-you-pee. But what about pain? What about balance? What about proprioception?

Aristotle’s restrictive and mechanistic list left him, and generations after him, with little purchase on the subject. Insights have been hard to come by.

Aristotle himself took one look at the octopus and declared it stupid.

Let’s see him driving a car with eight legs.