The revolt against scentlessness has been gathering for a while. Muchembled namechecks avant-garde perfumes with names like Bat and Rhinoceros. A dear friend of mine favours Musc Kublai Khan for its faecal notes. Another spends a small fortune to smell like cat’s piss. Right now I’m wearing Andy Tauer’s Orange Star: don’t approach unless you like Quality Street orange cremes macerated in petrol…


Cog ergo sum

Reading Matthew Cobb’s The Idea of the Brain for New Scientist, 15 April 2020

Ask a passer-by in 2nd-century Rome where consciousness resided — in the heart or in the head — and he was sure to say, in the heart. The surgeon-philosopher Galen of Pergamon had other ideas. During one show he had someone press upon the exposed brain of a pig, which promptly (and mercifully) passed out. Letting go brought the pig back to consciousness.

Is the brain one organ, or many? Are our mental faculties localised in the brain? Some 1,600 years after Galen, a Parisian gentleman tried to blow his brains out with a pistol. Instead he shot away his frontal bone, leaving the anterior lobes of his brain bare but undamaged. He was rushed to the Hôpital St. Louis, where Ernest Aubertin spent a few vain hours trying to save his life. Aubertin discovered that if he pressed a spatula on the patient’s brain while he was speaking, his speech “was suddenly suspended; a word begun was cut in two. Speech returned as soon as pressure was removed,” Aubertin reported.

Does the brain contain all we are? Eighty years after Aubertin, Montreal neurosurgeon Wilder Penfield was carrying out hundreds of brain operations to relieve chronic temporal-lobe epilepsy. Using delicate electrodes, he would map the safest cuts to make: ones that would not excise vital brain functions. When the tiniest regions were stimulated, his patients reported the strangest experiences. A piano being played. A telephone conversation between two family members. A man and a dog walking along a road. They weren’t memories, so much as dreamlike glimpses of another world.

Cobb’s history of brain science will fascinate readers quite as much as it occasionally horrifies. Cobb, a zoologist by training, has focused for much of his career on the sense of smell and the neurology of the humble fruit fly maggot. The Idea of the Brain sees him coming up for air, taking in the big picture before diving once again into the minutiae of his profession.

He makes a hell of a splash, too, explaining how the analogies we use to describe the brain both enrich our understanding of that mysterious organ, and hamstring our further progress. He shows how mechanical metaphors for brain function lasted well into the era of electricity. And he explains why computational metaphors, though unimaginably more fertile, are now throttling his science.

Study the brain as though it were a machine and in the end (and after much good work) you will run into three kinds of trouble.

First you will find that reverse engineering very complex systems is impossible. In 2017 two neuroscientists, Eric Jonas and Konrad Paul Kording, employed the techniques they normally used to analyse the brain to study the MOS 6507 processor, a chip found in computers from the late 1970s and early 1980s that enabled machines to run video games such as Donkey Kong, Space Invaders or Pitfall. Despite their powerful analytical armoury, and despite the fact that there is a clear explanation for how the chip works, they admitted that their study fell short of producing “a meaningful understanding”.

Another problem is the way the meanings of technical terms expand over time, warping the way we think about a subject. The French neuroscientist Romain Brette has a particular hatred for that staple of neuroscience, “coding”, a term first invoked by Edgar Adrian in the 1920s in a narrow technical sense, to describe a link between a stimulus and the activity of a neuron. Today almost everybody thinks of neural codes as representations of that stimulus, which is a real problem, because it implies that there must be an ideal observer or reader within the brain, watching and interpreting those representations. It may be better to think of the brain as constructing information, rather than simply representing it; only we have no idea (yet) how such an organ would function. For sure, it wouldn’t be a computer.

Which brings us neatly to our third and final obstacle to understanding the brain: we take far too much comfort and encouragement from our own metaphors. Do recent advances in AI bring us closer to understanding how our brains work? Cobb’s hollow laughter is all but audible. “My view is that it will probably take fifty years before we understand the maggot brain,” he writes.

One last history lesson. In the 1970s, twenty years after Penfield’s electrostimulation studies, Michael Gazzaniga, a cognitive neuroscientist at the University of California, Santa Barbara, studied the experiences of people whose brains had been split down the middle in a desperate effort to control their epilepsy. He discovered that each half of the brain was, on its own, sufficient to produce a mind, albeit with slightly different abilities and outlooks in each half. “From one mind, you had two,” Cobb remarks. “Try that with a computer.”

Hearing the news brought veteran psychologist William Estes to despair: “Great,” he snapped, “now we have two things we don’t understand.”

Judging the Baillie Gifford

This year I’ll be helping judge the Baillie Gifford prize for non-fiction. Radio 4 presenter Martha Kearney will chair the panel. Fellow judges are professor and author Shahidha Bari, New Statesman writer Leo Robson, New York Times editor Max Strasser and journalist and author Bee Wilson. The winner will be announced on Thursday 19 November.

Pluck

Early one morning in October 1874, a barge carrying three barrels of benzoline and five tons of gunpowder blew up in the Regent’s Canal, close to London Zoo. The crew of three were killed outright, scores of houses were badly damaged, the explosion could be heard 25 miles away, and “dead fish rained from the sky in the West End.”

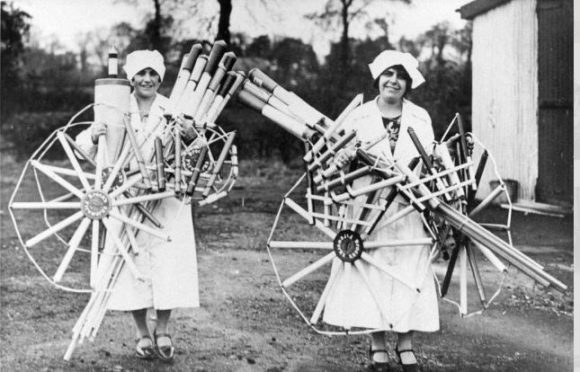

This is a book about the weird, if obvious, intersection between firework manufacture and warfare. It is, ostensibly, the biography of a hero of the First World War, Frank Brock. And if it were the work of more ambitious literary hands, Brock would have been all you got. His heritage, his school adventures, his international career as a showman, his inventions, his war work, his violent death. Enough for a whole book, surely?

But Gunpowder and Glory is not a “literary” work, by which I mean it is neither self-conscious nor overwrought. Instead Henry Macrory (who anyway has already proved his literary chops with his 2018 biography of the swindler Whitaker Wright) has opted for what looks like a very light touch here, assembling and ordering the anecdotes and reflections of Frank Brock’s grandson Harry Smee about his family, their business as pyrotechnical artists, and, finally, about Frank, his illustrious forebear.

I suspect a lot of sweat went into such artlessness, and it’s paid off, creating a book that reads like fascinating dinner conversation. Reading its best passages, I felt I was discovering Brock the way Harry had as a child, looking into his mother’s “ancient oak chests filled with papers, medals, newspapers, books, photographs, an Intelligence-issue knuckleduster and pieces of Zeppelin and Zeppelin bomb shrapnel.”

For eight generations, the Brock family produced pyrotechnic spectaculars of a unique kind. Typical set piece displays in the eighteenth century included “Jupiter discharging lightning and thunder, Two gladiators combating with fire and sword, and Neptune finely carv’d seated in his chair, drawn by two sea horses on fire-wheels, spearing a dolphin.”

Come the twentieth century, Brock’s shows were a signature of Empire. It would take a writer like Thomas Pynchon to do full justice to “a sixty foot-high mechanical depiction of the Victorian music-hall performer, Lottie Collins, singing the chorus of her famous song ‘Ta-ra-ra-boom-de-ay’ and giving a spirited kick of an automated leg each time the word ‘boom’ rang out.”

Frank was a Dulwich College boy, and one of that generation lost to the slaughter of the Great War. A spy and an inventor — James Bond and Q in one — he applied his inherited chemical and pyrotechnical genius to the war effort — by making a chemical weapon. It wasn’t any good, though: Jellite, developed during the summer of 1915 and named after its jelly-like consistency during manufacture, proved insufficiently lethal.

On such turns of chance do reputations depend, since we remember Frank Brock for his many less problematic inventions. Dover flares burned for seven and a half minutes and lit up an area of three miles’ radius, as Winston Churchill put it, “as bright as Piccadilly”. U-boats, diving to avoid these lights, encountered mines. Frank’s artificial fogs, hardly bettered since, concealed whole British fleets, entire Allied battle lines.

Then there are his incendiary bullets.

At the time of the Great War a decent Zeppelin could climb to 20,000 feet, travel at 47 mph for more than 1,000 miles, and stay aloft for 36 hours. Smee and Macrory are well within their rights to call them “the stealth bombers of their time”.

Brock’s bullets tore them out of the sky. Sir William Pope, Brock’s advisor, and a professor of chemistry at Cambridge University, explained: “You need to imagine a bullet proceeding at several thousand feet a second, and firing as it passes through a piece of fabric which is no thicker than a pocket handkerchief.” All to rupture a gigantic sac of hydrogen sufficiently to make the gas explode. (Much less easy than you think; the Hindenburg only crashed because its entire outer envelope was set on fire.)

Frank died in an assault on the mole at Zeebrugge in 1918. He shouldn’t have been there. He should have been in a lab somewhere, cooking up another bullet, another light, another poison gas. Today, he surely would be suitably contained, his efforts efficiently channelled, his spirit carefully and surgically broken.

Frank lived at a time when it was possible — and men, at any rate, were encouraged — to be more than one thing. That this heroic idea overreached itself — that rugby field and school chemistry lab both dissolved seamlessly into the Somme — needs no rehearsing.

Still, we have lost something. When Frank went to school there was a bookstall near the station which sold “a magazine called Pluck, containing ‘the daring deeds of plucky sailors, plucky soldiers, plucky firemen, plucky explorers, plucky detectives, plucky railwaymen, plucky boys and plucky girls and all sorts of conditions of British heroes’.”

Frank was a boy moulded thus, and sneer as much as you want, we will not see his like again.

Are you experienced?

Reading Wildhood by Barbara Natterson-Horowitz and Kathryn Bowers for New Scientist, 18 March 2020

A king penguin raised on the sub-Antarctic island of South Georgia. A European wolf. A spotted hyena in the Ngorongoro Crater in Tanzania. A North Atlantic humpback whale born near the Dominican Republic. What could these four animals have in common?

What if they were all experiencing the same life event? After all, all animals are born, and all of them die. We’re all hungry sometimes, for food, or a mate.

How far can we push this idea? Do non-human animals have mid-life crises, for example? (Don’t mock; there’s evidence that some primates experience the same happiness curves through their life-course as humans do.)

Barbara Natterson-Horowitz, an evolutionary biologist, and Kathryn Bowers, an animal behaviorist, have for some years been devising and teaching courses at Harvard and the University of California at Los Angeles, looking for “horizontal identities” across species boundaries.

The term comes from Andrew Solomon’s 2012 book Far from the Tree, which contrasts vertical identities (between you and your parents and grandparents, say) with horizontal identities, which are “those among peers with whom you share similar attributes but no family ties”. The authors of Wildhood have expanded Solomon’s concept to include other species; “we suggest that adolescents share a horizontal identity,” they write: “temporary membership in a planet-wide tribe of adolescents.”

The heroes of Wildhood (Ursula the penguin, Shrink the hyena, Salt the whale and Slavc the wolf) are all, loosely speaking, “teens”, and like teens everywhere, they have several mountains to climb at once. They must learn how to stay safe, how to navigate social hierarchies, how to communicate sexually, and how to care for themselves. They need to become experienced, and for that, they need to have experiences.

Well into the 1980s, researchers were discouraged from discussing the mental lives of animals. The change in scientific culture came largely thanks to the video camera. Suddenly it was possible for behavioural scientists to observe, not just closely, but repeatedly, and in slow motion. Soon discoveries arose that could not possibly have been arrived at with the naked eye alone. An animal’s supposedly rote, mechanical behaviours turned out to be the product of learning, experiment, and experience. Stereotyped calls and gestures were unpacked to reveal, not language in the human sense, but languages nonetheless, and many were of dizzying complexity. Animals that we thought were driven by instinct (a word you’ll never even hear used these days) turned out to be lively, engaged, conscious beings, scrabbling for purchase in a confusing and unpredictable world.

The four tales that make up the bulk of Wildhood are more than “Just So” stories. “Every detail,” the authors explain, “is based on and validated by data from GPS satellite or radio collar studies, peer-reviewed scientific literature, published reports and interviews with the investigators involved”.

In addition, each offers a different angle on a wealth of material about animal behaviour. Examples of animal friendship, bullying, nepotism, exploitation and even property inheritance arrive in such numbers and at such a level of detail that it takes an ordinary, baggy human word like “friendship” or “bullying” to contain them.

“Level playing fields don’t exist in nature”, the authors assert, and this is an important point, given the book’s central claim that by understanding the “wildhoods” of other animals, we can develop better approaches “for compassionately and skillfully guiding human adolescents toward adulthood.”

The point is not to use non-human behaviour as an excuse for human misbehaviour. Lots of animals kill and exploit each other, but that shouldn’t make exploitation or murder acceptable. The point is to know which battles to pick. Making young school playtimes boring by quashing the least sign of competitiveness makes little sense, given the amount of biological machinery dedicated to judging and ranking in every animal species from humans to lobsters. On the other hand, every young animal, when it returns to its parents, gets a well-earned break from the “playground”; young humans don’t. They’re now tied 24/7 to social media that prolong, exaggerate and exacerbate the ranking process. Is the rise in mental health problems among the affluent young triggered by this added stress?

These are speculations and discussions for another book, for which Wildhood may prove a necessary and charming foundation. Introduced in the first couple of pages to California sea otters, swimming up to sharks one moment then fleeing from a plastic boat the next, the reader can’t help but recognise, in the animals’ overly bold and overly cautious behaviour, the gawkiness and tremor of their own adolescence.

All fall down

Talking to Scott Grafton about his book Physical Intelligence (Pantheon), 10 March 2020.

“We didn’t emerge as a species sitting around.”

So says University of California neuroscientist Scott Grafton in the introduction to his thought-provoking new book Physical Intelligence. In it, Grafton assembles and explores all the neurological abilities that we take for granted: “simple” skills that in truth can only be acquired with time, effort and practice. Perceiving the world in three dimensions is one such skill; so is steadily carrying a cup of tea.

At UCLA, Grafton began his career mapping brain activity using positron emission tomography, to see how the brain learns new motor skills and recovers from injury or neurodegeneration. After a career developing new scanning techniques, and a lifetime’s walking, wild camping and climbing, Grafton believes he’s able to trace the neural architectures behind so-called “goal-directed behavior” — the business of how we represent and act physically in the world.

Grafton is interested in all those situations where “smart talk, texting, virtual goggles, reading, and rationalizing won’t get the job done”: those moments when the body accomplishes a complex task without much, if any, conscious intervention. A good example might be bagging groceries. Suppose you are packing six different items into two bags. There are 720 possible ways to do this and, assuming that like most people you want heavy items on the bottom, fragile items on the top, and cold items together, more than 700 of the possible solutions are wrong. And yet we almost always pack things so they don’t break or spoil, and we almost never have to agonise over the countless micro-decisions required to get the job done.

The grocery-bagging example is trivial, but often, what’s at stake in a task is much more serious — crossing the road, for example — and sometimes the experience required to accomplish it is much harder to come by. A keen hiker and scrambler, Grafton studs his book with first-hand accounts, at one point recalling how someone peeled off the side of a snow bank in front of him, in what escalated rapidly into a ghastly climbing accident. “At the spot where he fell,” he writes, “all I could think was how senseless his mistake had been. It was a steep section but entirely manageable. Knowing just a little bit more about how to use his ice axe, he could have readily stopped himself.”

To acquire experience, we have to have experiences. To acquire life-saving skills, we have to risk our lives. The temptation, now that we live most of our lives in urban comfort, is to create a world safe enough that we don’t need to expose ourselves to such risks, or acquire such skills.

But this, Grafton tells me, when we speak on the phone, would be a big mistake. “If all you ever are walking on is a smooth, nice sidewalk, the only thing you can be graceful on is that sidewalk, and nothing else,” he explains. “And that sets you up for a fall.”

He means this literally: “The number one reason people are in emergency rooms is from what emergency rooms call ‘ground-level falls’. I’ve seen statistics which show that more and more of us are falling over for no very good reason. Not because we’re dizzy. Not because we’re weak. But because we’re inept.”

For more than 1.3 million years of evolutionary time, hominids have lived without pavements or chairs, handling an uneven and often unpredictable environment. We evolved to handle a complex world, and a certain amount of constant risk. “Very enriched physical problem solving, which requires a lot of understanding of physical relationships, a lot of motor control, and some deftness in putting all those understandings together — all the while being constantly challenged by new situations — I believe this is really what drives brain networks towards better health,” Grafton says.

Our chat turns speculative. The more we remove risks and challenges from our everyday environment, Grafton suggests, the more likely we are to want to complicate that environment, and to create challenges for ourselves that require the acquisition of unusual motor skills. Might this be a major driver behind cultural activities like music-making, craft and dance?

Speculation is one thing; serious findings are another. At the moment, Grafton is gathering medical and social data to support an anecdotal observation of his: that the experience of walking in the wild not only improves our motor abilities, but also promotes our mental health.

“A friend of mine runs a wilderness programme in the Sierra Nevada for at-risk teenagers,” he explains, “and one of the things he does is to teach them how to get by for a day or two in the wilderness, on their own. It’s life-transforming. They come out of there owning their choices and their behaviour. Essentially, they’ve grown up.”

“I heard the rustling of the dress for two whole hours”

By the end of the book I had come to understand why kindness and cruelty cannot vanquish each other, and why, irrespective of our various ideas about social progress, our sexual and gender politics will always teeter, endlessly and without remedy, between “Orwellian oppression and the Hobbesian jungle”…

Reading Strange Antics: A History of Seduction by Clement Knox, 1 February 2020

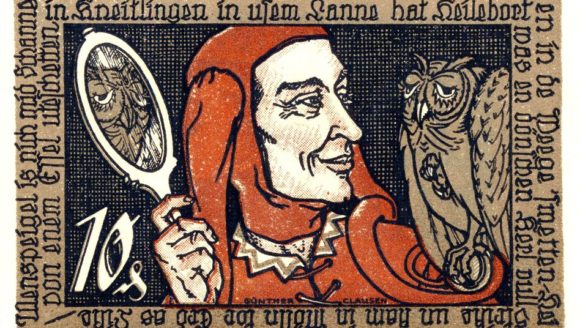

“If we’re going to die, at least give us some tits”

The Swedes are besieging the city of Brno. A bit of Googling reveals the year to be 1645. Armed with pick and shovel, the travelling entertainer Tyll Ulenspiegel is trying to undermine the Swedish redoubts when the shaft collapses, plunging him and his fellow miners into utter darkness. It’s difficult to establish even who is still alive and who is dead. “Say something about arses,” someone begs the darkness. “Say something about tits. If we’re going to die, at least give us some tits…”

Reading Daniel Kehlmann’s Tyll for the Times, 25 January 2020

Cutting up the sky

Reading A Scheme of Heaven: Astrology and the Birth of Science by Alexander Boxer for the Spectator, 18 January 2020

Look up at the sky on a clear night. This is not an astrological game. (Indeed, the experiment’s more impressive if you don’t know one zodiacal pattern from another, and rely solely on your wits.) In a matter of seconds, you will find patterns among the stars.

We can pretty much apprehend up to five objects (pennies, points of light, what-have-you) at a single glance. Totting up more than five objects, however, takes work. It means looking for groups, lines, patterns, symmetries, boundaries.

The ancients cut up the sky into figures, all those aeons ago, for the same reason we each cut up the sky within moments of gazing at it: because if we didn’t, we wouldn’t be able to comprehend the sky at all.

Our pattern-finding ability can get out of hand. During his Nobel lecture in 1973 the zoologist Konrad Lorenz recalled how he once “…mistook a mill for a sternwheel steamer. A vessel was anchored on the banks of the Danube near Budapest. It had a little smoking funnel and at its stern an enormous slowly-turning paddle-wheel.”

Some false patterns persist. Some even flourish. And the brighter and more intellectually ambitious you are, the likelier you are to be suckered. John Dee, Queen Elizabeth’s court philosopher, owned the country’s largest library (it dwarfed any you would find at Oxford or Cambridge). His attempt to tie up all that knowledge in a single divine system drove him into the arms of angels — or at any rate, into the arms of the “scrier” Edward Kelley, whose prodigious output of symbolic tables of course could be read in such a way as to reveal fragments of esoteric wisdom.

This, I suspect, is what most of us think about astrology: that it was a fanciful misconception about the world that flourished in times of widespread superstition and ignorance, and did not, could not, survive advances in mathematics and science.

Alexander Boxer is out to show how wrong that picture is, and A Scheme of Heaven will make you fall in love with astrology, even as it extinguishes any niggling suspicion that it might actually work.

Boxer, a physicist and historian, kindles our admiration for the earliest astronomers. My favourite among his many jaw-dropping stories is the discovery of the precession of the equinoxes. This is the process by which the sun, each mid-spring and mid-autumn, rises at a fractionally different spot in the sky each year. It takes 26,000 years to make a full revolution of the zodiac — a tiny motion first detected by Hipparchus around 130 BC. And of course Hipparchus, to make this observation at all, “had to rely on the accuracy of stargazers who would have seemed ancient even to him.”

In short, he had a library card. And we know that such libraries existed because the “astronomical diaries” from the Assyrian library at Nineveh stretch from 652 BC to 61 BC, representing possibly the longest continuous research programme ever undertaken in human history.

Which makes astrology not too shoddy, in my humble estimation. Boxer goes much further, dubbing it “the ancient world’s most ambitious applied mathematics problem.”

For as long as lives depend on the growth cycles of plants, the stars will, in a very general sense, dictate the destiny of our species. How far can we push this idea before it tips into absurdity? The answer is not immediately obvious, since pretty much any scheme we dream up will fit some conjunction or arrangement of the skies.

As civilisations become richer and more various, the number and variety of historical events increases, as does the chance that some event will coincide with some planetary conjunction. Around the year 1400, the French Catholic cardinal Pierre d’Ailly concluded his astrological history of the world with a warning that the Antichrist could be expected to arrive in the year 1789, which of course turned out to be the year of the French Revolution.

But with every spooky correlation comes an even larger horde of absurdities and fatuities. Today, using a machine-learning algorithm, Boxer shows that “it’s possible to devise a model that perfectly mimics Bitcoin’s price history and that takes, as its input data, nothing more than the zodiac signs of the planets on any given day.”

The Polish science fiction writer Stanislaw Lem explored this territory in his novel The Chain of Chance: “We now live in such a dense world of random chance,” he wrote in 1975, “in a molecular and chaotic gas whose ‘improbabilities’ are amazing only to the individual human atoms.” And this, I suppose, is why astrology eventually abandoned the business of describing whole cultures and nations (a task now handed over to economics, another largely ineffectual big-number narrative) and now, in its twilight, serves merely to gull individuals.

Astrology, to work at all, must assume that human affairs are predestined. It cannot, in the long run, survive the notion of free will. Christianity did for astrology, not because it defeated a superstition, but because it rendered moot astrology’s iron bonds of logic.

“Today,” writes Boxer, “there’s no need to root and rummage for incidental correlations. Modern machine-learning algorithms are correlation monsters. They can make pretty much any signal correlate with any other.”

We are bewitched by big data, and imagine it is something new. We are ever-indulgent towards economists who cannot even spot a global crash. We credulously conform to every algorithmically justified norm. Are we as credulous, then, as those who once took astrological advice as seriously as a medical diagnosis? Oh, for sure.

At least our forebears could say they were having to feel their way in the dark. The statistical tools you need to sort real correlations from pretty patterns weren’t developed until the late nineteenth century. What’s our excuse?

“Those of us who are enthusiastic about the promise of numerical data to unlock the secrets of ourselves and our world,” Boxer writes, “would do well simply to acknowledge that others have come this way before.”

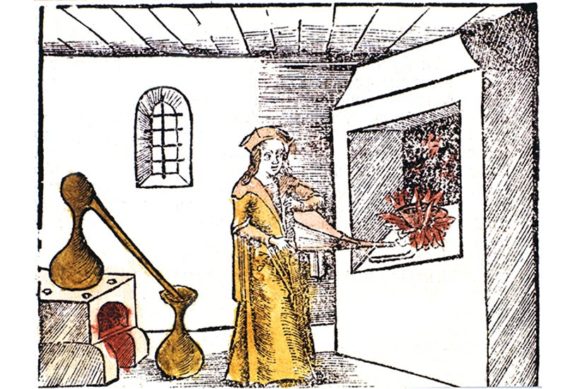

‘God knows what the Chymists mean by it’

Reading Antimony, Gold, and Jupiter’s Wolf: How the Elements Were Named, by Peter Wothers, for The Spectator, 14 December 2019

Here’s how the element antimony got its name. Once upon a time (according to the 17th-century apothecary Pierre Pomet), a German monk (moine in French) noticed its purgative effects in animals. Fancying himself as a physician, he fed it to “his own Fraternity… but his Experiment succeeded so ill that every one who took of it died. This therefore was the reason of this Mineral being call’d Antimony, as being destructive of the Monks.”

If this sounds far-fetched, the Cambridge chemist Peter Wothers has other stories for you to choose from, each more outlandish than the last. Keep up: we have 93 more elements to get through, and they’re just the ones that occur naturally on Earth. They each have a history, a reputation and in some cases a folklore. To investigate their names is to evoke histories that are only intermittently scientific. A lot of this enchanting, eccentric book is about mining and piss.

The mining:

There was no reliable lighting or ventilation; the mines could collapse at any point and crush the miners; they could be poisoned by invisible vapours or blown up by the ignition of pockets of flammable gas. Add to this the stifling heat and the fact that some of the minerals themselves were poisonous and corrosive, and it really must have seemed to the miners that they were venturing into hell.

Above ground, there were other difficulties. How to spot the new stuff? What to make of it? How to distinguish it from all the other stuff? It was a job that drove men spare. In a 1657 Physical Dictionary the entry for Sulphur Philosophorum states simply: ‘God knows what the Chymists mean by it.’

Today we manufacture elements, albeit briefly, in the lab. It’s a tidy process, with a tidy nomenclature. Copernicium, einsteinium, berkelium: neologisms as orderly and unevocative as car marques.

The more familiar elements have names that evoke their history. Cobalt, found in a mineral that used to burn and poison miners, is named for the imps that, according to the 16th-century German Georgius Agricola ‘idle about in the shafts and tunnels and really do nothing, although they pretend to be busy in all kinds of labour’. Nickel is kupfernickel, ‘the devil’s copper’, an ore that looked like valuable copper ore but, once hauled above the ground, appeared to have no value whatsoever.

In this account, technology leads and science follows. If you want to understand what oxygen is, for example, you first have to be able to make it. And Cornelius Drebbel, the maverick Dutch inventor, did make it, in 1620, 150 years before Joseph Priestley got in on the act. Drebbel had no idea what this enchanted stuff was, but he knew it sweetened the air in his submarine, which he demonstrated on the Thames before King James I. Again, if you want a good scientific understanding of alkalis, say, then you need soap, and lye so caustic that when a drunk toppled into a pit of the stuff ‘nothing of him was found but his Linnen Shirt, and the hardest Bones, as I had the Relation from a Credible Person, Professor of that Trade’. (This is Otto Tachenius, writing in 1677. There is a lot of this sort of thing. Overwhelming in its detail as it can be, Antimony, Gold, and Jupiter’s Wolf is wickedly entertaining.)

Wothers does not care to hold the reader’s hand. From page 1 he’s getting his hands dirty with minerals and earths, metals and the aforementioned urine (without which the alchemists, wanting chloride, sodium, potassium and ammonia, would have been at a complete loss) and we have to wait till page 83 for a discussion of how the modern conception of elements was arrived at. The periodic table doesn’t arrive till page 201 (and then it’s Mendeleev’s first table, published in 1869). Henri Becquerel discovers radioactivity barely four pages before the end of the book. It’s a surprising strategy, and a successful one. Readers fall under the spell of the possibilities of matter well before they’re asked to wrangle with any of the more highfalutin chemical concepts.

In 1782, Louis-Bernard Guyton de Morveau published his Memoir upon Chemical Denominations, the Necessity of Improving the System, and the Rules for Attaining a Perfect Language. Countless idiosyncrasies survived his reforms. But chemistry did begin to acquire an orderliness that made Mendeleev’s towering work nearly a century later (relating the elements to one another through their atomic weights) a great deal easier.

This story has an end. Chemistry as a discipline is now complete. All the major problems have been solved. There are no more great discoveries to be made. Every chemical reaction we do is another example of one we’ve already done. These days, chemists are technologists: they study spectrographs, and argue with astronomers about the composition of the atmospheres around planets orbiting distant stars; they tinker in biophysics labs, and have things to say about protein synthesis. The heroic era of chemical discovery — in which we may fondly recall Gottfried Leibniz extracting phosphorus from 13,140 litres of soldiers’ urine — is past. Only some evocative words remain; and Wothers unpacks them with infectious enthusiasm, and something which in certain lights looks very like love.