Reading Gerd Gigerenzer’s How to Stay Smart in a Smart World for the Times, 26 February 2022

Some writers are like Moses. They see further than everybody else, have a clear sense of direction, and are natural leaders besides. These geniuses write books that show us, clearly and simply, what to do if we want to make a better world.

Then there are books like this one — more likeable, and more honest — in which the author stumbles upon a bottomless hole, sees his society approaching it, and spends 250-odd pages scampering about the edge of the hole yelling at the top of his lungs — though he knows, and we know, that society is a machine without brakes, and all this shouting comes far, far too late.

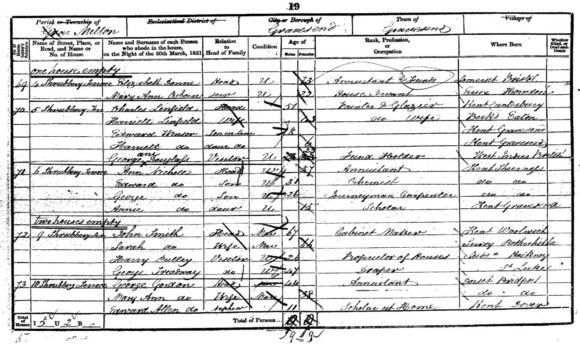

Gerd Gigerenzer is a German psychologist who has spent his career studying how the human mind comprehends and assesses risk. We wouldn’t have lasted even this long as a species if we didn’t negotiate day-to-day risks with elegance and efficiency. We know, too, that evolution will have forced us to formulate the quickest, cheapest, most economical strategies for solving our problems. We call these strategies “heuristics”.

Heuristics are rules of thumb, developed by extemporising upon past experiences. They rely on our apprehension of, and constant engagement in, the world beyond our heads. We can write down these strategies; share them; even formalise them in a few lines of light-weight computer code.

Here’s an example from Gigerenzer’s own work: Is there more than one person in that speeding vehicle? Is it slowing down as ordered? Is the occupant posing any additional threat?

Abiding by the rules of engagement set by this tiny decision tree reduces civilian casualties at military checkpoints by more than sixty per cent.
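As a minimal sketch of how little code such a tree needs, here are the three questions above written as a Python function. The order of the questions follows the text; which answer leads to which verdict, the `Vehicle` record, and the labels "civilian" and "hostile" are assumptions made for illustration, not Gigerenzer's published rules of engagement.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    """Hypothetical record of what a checkpoint guard can observe."""
    occupants: int
    slowing_as_ordered: bool
    additional_threat: bool


def classify(vehicle: Vehicle) -> str:
    """Ask the three questions in order; stop at the first decisive answer.

    The exits chosen at each question are assumptions for this sketch.
    """
    # Question 1: is there more than one person in the vehicle?
    if vehicle.occupants > 1:
        return "civilian"
    # Question 2: is it slowing down as ordered?
    if not vehicle.slowing_as_ordered:
        return "hostile"
    # Question 3: is the occupant posing any additional threat?
    if vehicle.additional_threat:
        return "hostile"
    return "civilian"


if __name__ == "__main__":
    print(classify(Vehicle(occupants=1, slowing_as_ordered=True, additional_threat=False)))
```

Each question either settles the matter or passes the case on to the next one, so no answer is ever weighed against another. That is what keeps the tree small enough to memorise.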

We can apply heuristics to every circumstance we are likely to encounter, regardless of the amount of data available. The complex algorithms that power machine learning, on the other hand, “work best in well-defined, stable situations where large amounts of data are available”.

What happens if we decide to hurl 200,000 years of heuristics down the toilet, and kneel instead at the altar of occult computation and incomprehensibly big data?

Nothing good, says Gigerenzer.

How to Stay Smart is a number of books in one, none of which, on its own, is entirely satisfactory.

It is a digital detox manual, telling us how our social media are currently weaponised, designed to erode our cognition (but we can fill whole shelves with such books).

It punctures many a rhetorical bubble around much-vaunted “artificial intelligence”, pointing out how easy it is to, say, get a young man of colour charged without bail using proprietary risk-assessment software. (In some notorious cases the software had been trained on, and so was liable to perpetuate, historical injustices.) Or would you prefer to force an autonomous car to crash by wearing a certain kind of T-shirt? (Simple, easily generated pixel patterns cause whole classes of networks to draw bizarre inferential errors about the movement of surrounding objects.) This is enlightening stuff, or it would be, were the stories not quite so old.

One very valuable section explains why forecasts derived from large data sets become less reliable, the more data they are given. In the real world, problems are unbounded; the amount of data relevant to any problem is infinite. This is why past information is a poor guide to future performance, and why the future always wins. Filling a system with even more data about what used to happen will only bake in the false assumptions that are already in your system. Gigerenzer goes on to show how vested interests hide this awkward fact behind some highly specious definitions of what a forecast is.

But the most impassioned and successful of these books-within-a-book is the one that exposes the hunger for autocratic power, the political naivety, and the commercial chicanery that lie behind the rise of “AI”. (Healthcare AI is a particular bugbear: the story of how the Dutch Cancer Society was suckered into funding big data research, at the expense of cancer prevention campaigns that were shown to work, is especially upsetting.)

Threaded through this diverse material is an argument Gigerenzer maybe should have made at the beginning: that we are entering a new patriarchal age, in which we are obliged to defer, neither to spiritual authority, nor to the glitter of wealth, but to unliving, unconscious, unconscionable systems that direct human action by aping human wisdom just well enough to convince us, but not nearly well enough to deliver happiness or social justice.

Gigerenzer does his best to educate and energise us against this future. He explains the historical accidents that led us to muddle cognition with computation in the first place. He tells us what actually goes on, computationally speaking, behind the chromed wall of machine-learning blarney. He explains why, no matter how often we swipe right, we never get a decent date; he explains how to spot fake news; and he suggests how we might claw our minds free of our mobile phones.

But it’s a hopeless effort, and the book’s most powerful passages explain exactly why it is hopeless.

“To improve the performance of AI,” Gigerenzer explains, “one needs to make the physical environment more stable and people’s behaviour more predictable.”

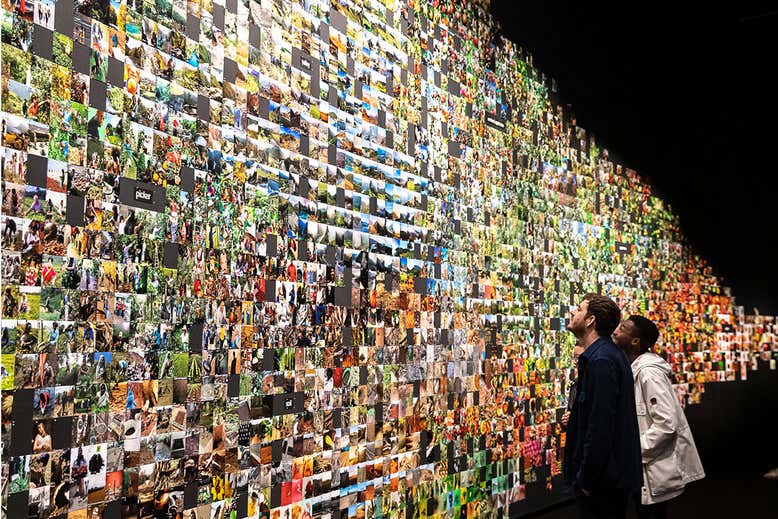

In China, the surveillance this entails comes wrapped in Confucian motley: under its social credit score system, sincerity, harmony and wealth creation trump free speech. In the West the self-same system, stripped of any ethic, is well advanced thanks to the efforts of the credit-scoring industry. One company, Acxiom, claims to have collected data from 700 million people worldwide, and up to 3,000 data points for each individual (and quite a few are wrong).

That this bumper data harvest is an encouragement to autocratic governance hardly needs rehearsing, or so you would think.

And yet, in a 2021 study of 3,446 digital natives, 96 per cent “do not know how to check the trustworthiness of sites and posts.” I think Gigerenzer is pulling his punches here. What if, as seems more likely, 96 per cent of digital natives can’t be bothered to check the trustworthiness of sites and posts?

Asked by the author in a 2019 study how much they would be willing to spend each month on ad-free social media — that is, social media not weaponised against the user — 75 per cent of respondents said they would not pay a cent.

Have we become so trivial, selfish, short-sighted and penny-pinching that we deserve our coming subjection? Have we always been servile at heart, for all our talk of rights and freedoms; desperate for some grown-up to come tug at our leash, and bring us to heel?

You may very well think so. Gigerenzer could not possibly comment. He does, though, remark that operant conditioning (the kind of learning explored in the 1940s by behaviourist B F Skinner, that occurs through rewards and punishments) has never enjoyed such political currency, and that “Skinner’s dream of a society where the behaviour of each member is strictly controlled by reward has become reality.”

How to Stay Smart in a Smart World is an optimistic title indeed for a book that maps, with passion and precision, a hole down which we are already plummeting.