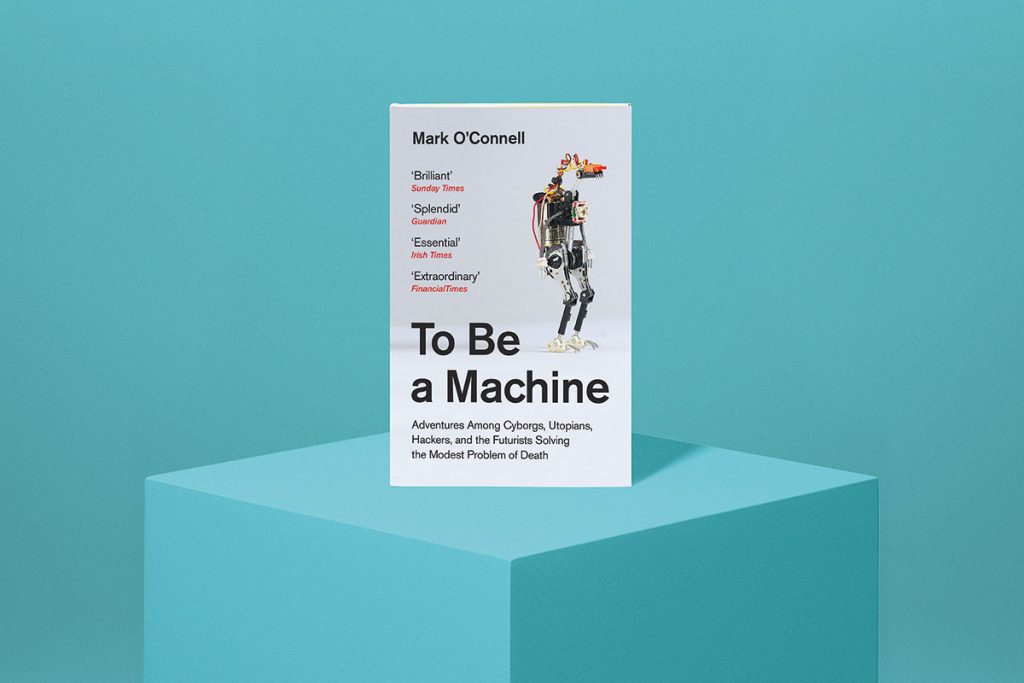

Mark O’Connell’s To Be a Machine, a travelogue of strange journeys and bizarre encounters among transhumanists, won the 2018 Wellcome Book Prize. Wearing my New Scientist hat, I asked O’Connell how he managed to give transhumanism a human face – despite his own scepticism.

Has transhumanism ever made personal sense to you?

Transhumanism’s critique of the human condition, its anxiety around having to die – that’s something I have some sympathy with, for sure, and that’s where the book began. The idea was that the door to some kind of conversion would always be open. But I was never really convinced that the big ideas in transhumanism, things like mind-uploading and so on, were really plausible. The most interesting question for me was, “Why would anyone want this?”

A lot of transhumanist thought is devoted to evading death. Do the transhumanists you met get much out of life?

I wouldn’t want to be outright prescriptive about what it means to live a meaningful life. I’m still trying to figure that one out myself. I think if you’re so devoted to the idea that we can outrun death, and that death makes life utterly meaningless, then you are avoiding the true animal nature of what it means to be human. But I find myself moving back and forth between that position and one that says, you know what, these people are driven by a deep, Promethean project. I don’t have the deep desire to shake the world to its core that these people have. In that sense, they’re living life to its absolute fullest.

What most sticks in your mind from your researches for the book?

The place that sticks in my mind most clearly is Alcor’s cryogenic life extension facility. In terms of just the visuals, it’s bizarre. You’re walking around what’s known as a “patient care bay”, among these gigantic stainless steel cylinders filled with corpses and severed heads that they’re going to unfreeze once a cure for death is found. The thing that really grabbed me was the juxtaposition between how sci-fi the whole thing was and the fact that it was situated in a business park on the outskirts of Phoenix, next door to Big D’s Floor Covering Supplies and a tile showroom.

They do say the future arrives unevenly…

I think we’re at a very particular cultural point in terms of our relationship to “the future”. We aren’t really thinking of science as this boundless field of possibility any more, and so it seems a bit of a throwback, like something from an Arthur C. Clarke story. It’s like the thing with Elon Musk. Even the global problems he identifies – rogue AI, and finding a new planet that we can live on to perpetuate the species – seem so completely removed from the actual problems that people are facing right now that they’re absurd. A handful of people who seem to wield almost infinite technological resources are devoting themselves to completely speculative non-problems. They’re not serious, on some basic level.

Are you saying transhumanism is a product of an unreal Silicon Valley mentality?

The big cultural influence over transhumanism, the thing that took it to the next level, seems to have been the development of the internet in the late 1990s. That’s when it really became a distinct social movement, as opposed to a group of more-or-less isolated eccentric thinkers and obsessives.

But it’s very much a global movement. I met a lot of Europeans – Russia in particular has a long prehistory of attempts to evade death. But most transhumanists have tended to end up in the US and specifically in Silicon Valley. I suppose that’s because these kinds of ideas get most traction there. You don’t get people laughing at you when you mention that you want to live forever.

The one person I really found myself grappling with, in the most profound and unsettling way, was Randal Koene. It’s his idea of uploading the human mind to a computer that I find most deeply troubling and offensive, and kind of absurd. As a person and as a communicator, though, Koene was very powerful. A lot of people who are pushing forward these ideas – people like Ray Kurzweil – tend to be impresarios. Randal was the opposite. He was very quietly spoken, very humble, very much the scientist. There were moments when he really pushed me out of my scepticism – and I liked him.

Is transhumanism science or religion?

It’s not a religion: there’s no God, for instance. But at the same time I think it very obviously replaces religion in terms of certain basic yearnings and anxieties. The anxiety about death is the obvious one.

There is a very serious religious subtext to all of transhumanism’s aspirations. And at the same time, transhumanists absolutely reject that thinking, because it tends to undermine their perception of themselves as hardline rationalists and deeply science-y people. Mysticism is quite toxic to their sense of themselves.

Will their future ever arrive?

On one level, it’s already happening. We’re walking round in this miasma of information and data, almost in a state of merger with technology. That’s what we’re grappling with as a culture. But if that future means an actual merger of artificial intelligence and human intelligence, I think that’s a deeply terrifying idea, and not, touch wood, something that is ever going to happen.

Should we be worried?

That is why I’m now writing a book about apocalyptic anxieties. It’s a way to try to get to grips with our current political and cultural moment.

To Be a Machine: Adventures among cyborgs, utopians, hackers, and the futurists solving the modest problem of death

Mark O’Connell

Granta/Doubleday