Heteropoda davidbowie is a species of huntsman spider. Though rare, it has been found in parts of Malaysia, Singapore, Indonesia and possibly Thailand. (The uncertainty arises because it’s often mistaken for a similar-looking species, Heteropoda javana.) In 2008 a German collector sent photos of his unusual-looking “pet” to Peter Jäger, an arachnologist at the Senckenberg Research Institute in Frankfurt. So, like most other living finds, David Bowie’s spider was discovered twice: once in the field, and once in the collection.

Bowie’s spider is famous, but not exceptional. Jäger has discovered more than 200 species of spider in the last decade, and names them after politicians, comedians and rock stars to highlight our ecological plight. Other researchers find more pointed ways to further the same cause. In the first month of Donald Trump’s administration, Iranian-Canadian entomologist Vazrick Nazari discovered a moth whose head is crowned with large, blond comb-over scales. There’s more to Neopalpa donaldtrumpi than a striking physical resemblance: it lives in a federally protected area around where the border wall with Mexico is supposed to go. Cue headlines.

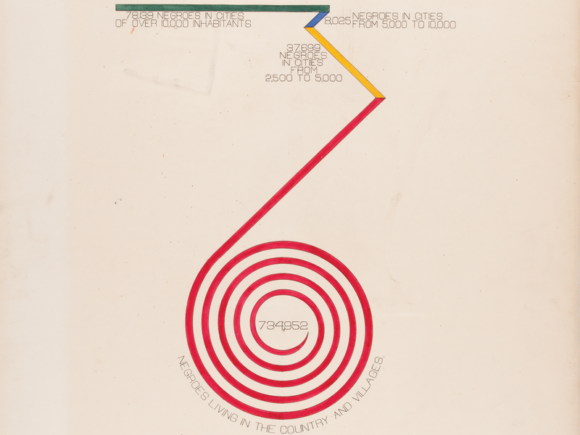

Species are becoming extinct 100 times faster than they did before modern humans arrived. This makes reading a book about the naming of species a curiously queasy affair. Nor is there much comfort to be had in evolutionary ecologist Stephen Heard’s observation that, having described 1.5 million species, we’ve at the very best recorded only half of what’s out there. There is, you may recall, that devastating passage in Cormac McCarthy’s western novel Blood Meridian in which Judge Holden meticulously records a Native American artifact in his sketchbook, then destroys it. Given that to discover a species you must, by definition, invade its environment, Holden’s sketch-and-burn habit appears to be a painfully accurate metonym for what the human species is doing to the planet. Since the 1970s (when there were twice as many wild animals as there are now) we’ve been discovering and endangering new species in almost the same breath.

Richard Spruce, one of the Victorian era’s great botanical explorers, who spent 15 years surveying the Amazon from the Andes to its mouth, is a star of this short, charming book about how we have named and ordered the living world. No detail of his bravery, resilience and grace under pressure comes close, however, to the eloquence of this passing quotation: “Whenever rains, swollen streams, and grumbling Indians combined to overwhelm me with chagrin,” he wrote in his account of his travels, “I found reason to thank heaven which had enabled me to forget for the moment all my troubles in the contemplation of a simple moss.”

Heard, who is based in Canada, explains how extraordinary amounts of curiosity have been codified to create a map of the living world. The legalistic-sounding codes by which species are named turn out to be admirably egalitarian: the names amateurs give species are just as valid as those given by professional scientists.

Formal names are necessary because we find it hard to distinguish between similar species, and common names run into this difficulty all the time. There are too many of them, so the same species gets different names in different languages. At the same time, there aren’t enough of them, so that, as Heard points out, “Darwin’s finches aren’t finches, African violets aren’t violets, and electric eels aren’t eels”; robins, blackbirds and badgers are entirely different animals in Europe and North America; and virtually every flower has at one time or another been called a daisy.

Common names also tend, reasonably enough, to be descriptive. This is fine when you’re distinguishing between, say, five different types of fish. When there are 500 different fish to sort through, however, absurdity beckons. Heard lovingly transcribes the pre-Linnaean species name of the English whiting, formulated around 1738: “Gadus, dorso tripterygio, ore cirrato, longitudine ad latitudinem tripla, pinna ani prima officulorum trigiata”. So there.

It takes nothing away from the genius of Swedish physician Carl Linnaeus, who formulated the naming system we still use today, to say that he came along at the right time. By Linnaeus’s day, advances in printing and distribution had made reference works possible, and with them the ability to look things up. Linnaeus’s innovation was to decouple names from descriptions: once the description could live in a book, the name no longer had to carry it. And this, as Heard reveals in anecdote after anecdote, is where the fun now slips in: the mythopoeic cool of the baboon Papio anubis, the mischievous smarts of the beetle Agra vation, the nerd celebrity of the lemur Avahi cleesi.

Heard’s taxonomy of taxonomies makes for somewhat thin reading; this is less a book than a dozen interesting magazine articles flying in close formation. But its tight focus, bringing to life the minutiae of both the living world and the practice of science, is welcome.

I once met Michael Land, the neurobiologist who figured out how the lobster’s eye works. He told me that the trouble with big ideas is that they get in the way of the small ones. Heard’s lesson, delivered with such a light touch, is the same. The joy, and much of the accompanying wisdom, lies in the detail.